Introduction: The Bedrock AI Revolution

Everyone’s buzzing about Bedrock AI. And there’s a reason for that. This isn’t just another AI tool—it’s rewriting the rules of how artificial intelligence is built, shipped, scaled, and priced for real-world use.

For developers, it’s like getting Formula 1-grade horsepower without needing a pit crew. For investors, it’s the kind of infrastructure bet that turns small firms into market leaders. And if you’re in research or the startup grind, this is your blueprint to leapfrog your competition—without hiring an army of PhDs.

Amazon Bedrock started simple: a way to tap into foundation models without the usual ops nightmare. But now? It’s a full-stack command center where you can deploy AI-powered agents, run hyper-specific simulations, and even distill massive models into cheaper clones, all without a GPU farm in your basement.

It ties directly into what everyone actually cares about:

- Cutting dev time from months to days

- Automating workflows while keeping hallucinated nonsense in check

- Slashing AI costs while keeping enterprise-grade performance

From programming integrations to emerging tech, this platform isn’t just a nice-to-have. It’s the new standard. The companies using it—from Moody’s to PwC—aren’t playing around. They’re accelerating innovation while their competitors are still trying to scope use cases.

If you’re still debugging legacy ML pipelines… you’re missing the next wave.

The Foundation Of Bedrock AI’s Success

Let’s keep it real. Before Bedrock AI, most businesses were either bootstrapping their AI efforts with open-source chaos or spending a fortune on black-box APIs that didn’t scale. Amazon mapped out that pain and, instead of just patching the problem, engineered a unified, deploy-on-demand AI ecosystem that snaps into AWS like Lego blocks.

Here’s how it evolved:

| Phase | What Bedrock Did | Why It Mattered |
|---|---|---|
| Launch Phase | Aggregated access to open and proprietary foundation models | Cut the friction for dev teams building with FM APIs |
| Platform Growth | Added private customization and automated reasoning tools | Enabled vertical-specific workflows and smarter agents |
| Current State | Supports over 50 top-tier models, including Claude, Titan, and Stable Diffusion | End-to-end AI deployment under one roof, minus the bloat |

They didn’t stop at model access. Bedrock’s serverless architecture removes the usual deployment bottlenecks. Got a retail chatbot scaling from 100 to 10,000 users? Bedrock scales with it, and you never touch a load balancer. It also hooks straight into AWS Lambda and SageMaker, which means real-time feedback loops, auto-scaling, and lower ops overhead.

But the killer feature? Model distillation.

Why burn cash running a 175-billion-parameter LLM just to get a yes/no answer?

Bedrock lets you train a smaller, cheaper, nearly-as-smart clone that runs up to 5x faster and costs up to 75% less per inference.

Healthcare teams are doing just that—dropping processing costs from $5.20 to $1.30 per 1,000 queries—and still hitting 98% triage accuracy.
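The healthcare numbers line up with the 75% figure above. Here’s a quick back-of-the-envelope check using only the per-1,000-query prices quoted in this section; the monthly query volume is a made-up example, and `cost_savings` is just an illustrative helper.

```python
def cost_savings(baseline_per_1k: float, distilled_per_1k: float, monthly_queries: int) -> dict:
    """Compare a baseline model's cost with a distilled model's cost."""
    # Fraction of per-query cost saved by switching to the distilled model.
    saved_fraction = (baseline_per_1k - distilled_per_1k) / baseline_per_1k
    # Scale both per-1,000 prices up to the monthly volume.
    baseline_monthly = baseline_per_1k * monthly_queries / 1_000
    distilled_monthly = distilled_per_1k * monthly_queries / 1_000
    return {
        "saved_percent": round(saved_fraction * 100, 1),
        "baseline_monthly": round(baseline_monthly, 2),
        "distilled_monthly": round(distilled_monthly, 2),
        "monthly_savings": round(baseline_monthly - distilled_monthly, 2),
    }


if __name__ == "__main__":
    # Prices from the healthcare example; 2 million queries/month is hypothetical.
    print(cost_savings(5.20, 1.30, 2_000_000))
```

With those inputs the function reports 75.0% saved, or $7,800 a month at that (made-up) volume.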

Startups are using distilled models to drop zero-to-prototype time from 90 days to 7.

The tech isn’t flashy. It’s effective.

No army of super-engineers required.

Bedrock AI In Machine Learning And Automation

Here’s where Bedrock shifts from “tool” to “infrastructure.”

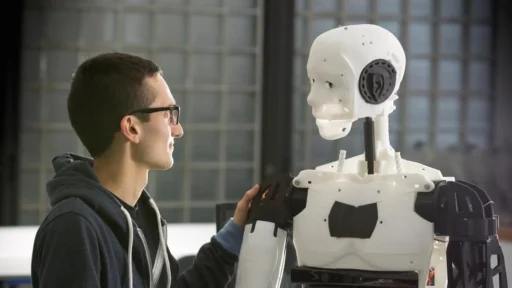

Much of the platform is built around autonomous agent teams. Think of them like your in-house departments, except with no sick days, no forgetfulness, and no burnout.

You’ve got one agent parsing financial filings, another grabbing regulatory data via Retrieval-Augmented Generation (RAG), and a third writing clean, human-quality summaries, all working in sync.

That’s exactly how Moody’s uses Bedrock AI to automate analysis of over 10 million credit reports per year. They didn’t just save time; they eliminated 82% of their manual review hours. And with RAG built in, these agents aren’t just faster. They’re more accurate.

Here’s how multi-agent orchestration creates real leverage:

- One agent handles data ingestion while others build usable output in real time

- No redundant processing, fewer hallucinations, no team silos

- Teams cut task cycles by 40%—that’s weeks shaved off enterprise tasks
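The “one agent ingests while others build output” pattern is essentially a producer/consumer pipeline. Below is a minimal local sketch using Python’s `asyncio` queues. The agent functions are hypothetical stand-ins, not Bedrock Agents APIs; in a real system the summarizer step would call a model instead of keeping the first sentence.

```python
import asyncio


async def ingest_agent(docs: list[str], queue: asyncio.Queue) -> None:
    """Hypothetical ingestion agent: pushes cleaned documents onto a queue."""
    for doc in docs:
        await queue.put(doc.strip())
        await asyncio.sleep(0)  # yield so the downstream agent can start early
    await queue.put(None)  # sentinel: no more documents


async def summary_agent(queue: asyncio.Queue, out: list[str]) -> None:
    """Hypothetical summarizer: consumes documents as soon as they arrive."""
    while True:
        doc = await queue.get()
        if doc is None:
            break
        # Placeholder for a model call: keep the first sentence as the "summary".
        out.append(doc.split(".")[0] + ".")


async def run_pipeline(docs: list[str]) -> list[str]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=10)  # bounded, so ingestion can't race too far ahead
    summaries: list[str] = []
    # Both agents run concurrently; the summarizer works while ingestion continues.
    await asyncio.gather(ingest_agent(docs, queue), summary_agent(queue, summaries))
    return summaries


if __name__ == "__main__":
    reports = ["Revenue rose 4%. Margins held.", "Debt fell. Outlook stable."]
    print(asyncio.run(run_pipeline(reports)))
```

The bounded queue is the design choice that matters here: it lets downstream work start immediately while keeping memory in check if ingestion is faster than summarization.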

But maybe precision’s more your game. That’s where Bedrock’s implementation of RAG owns the field.

A legal tech firm used RAG to match contracts with live compliance data. Result? 62% fewer factual errors, and every AI-generated clause gets confidence scores. Now humans review outcomes, not inputs.
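To make “confidence scores” concrete, here’s a toy retrieval step in the spirit of RAG: each generated clause is matched against a small compliance corpus by bag-of-words cosine similarity, and the best score doubles as a crude confidence value that decides whether a human reviews it. Real RAG setups (Bedrock Knowledge Bases included) use embedding models and vector stores instead; the rule IDs, rule texts, threshold, and function names here are hypothetical.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def match_clause(clause: str, rules: dict[str, str], review_below: float = 0.3):
    """Return (best_rule_id, confidence, needs_human_review) for one clause."""
    clause_vec = _vectorize(clause)
    best_id, best_score = None, 0.0
    for rule_id, rule_text in rules.items():
        score = cosine(clause_vec, _vectorize(rule_text))
        if score > best_score:
            best_id, best_score = rule_id, score
    return best_id, round(best_score, 3), best_score < review_below


if __name__ == "__main__":
    compliance_rules = {  # hypothetical, paraphrased rule snippets
        "GDPR-17": "personal data must be erased on request without undue delay",
        "SOX-404": "management must assess internal controls over financial reporting",
    }
    print(match_clause("The vendor shall erase personal data on request.", compliance_rules))
```

Low-scoring clauses come back flagged for review, which is the “humans review outcomes, not inputs” idea in miniature.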

And we’re not just talking white-collar use.

Bedrock AI’s automation tools link directly into AWS’s serverless backbone—Lambda, SageMaker, S3—meaning feedback from customer support can trigger real-time CRM updates, inventory changes, or tailored promos.

Forget “prompt tuning”—you’re now scheduling intelligent action.
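A rough sketch of that “scheduling intelligent action” idea as an AWS Lambda handler. It assumes the incoming event carries a `feedback` string, asks a Bedrock model to label it through the bedrock-runtime Converse API, and maps the label to a downstream action. The event shape, label set, model ID, and action names are all assumptions; the client is injectable so the routing logic can be tested without AWS credentials.

```python
import json

MODEL_ID = "amazon.titan-text-express-v1"  # example model ID; availability varies by region
ACTIONS = {  # hypothetical label -> downstream action mapping
    "stock_issue": "update_inventory",
    "complaint": "update_crm_case",
    "praise": "send_promo",
}


def classify_feedback(client, text: str) -> str:
    """Ask a Bedrock model for a one-word label using the Converse API."""
    prompt = (
        "Label this customer feedback as exactly one of: "
        + ", ".join(ACTIONS)
        + ".\nFeedback: "
        + text
    )
    response = client.converse(
        modelId=MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 10, "temperature": 0.0},
    )
    raw = response["output"]["message"]["content"][0]["text"]
    return raw.strip().strip(".").lower()  # normalize " Complaint." -> "complaint"


def lambda_handler(event, context, client=None):
    """Lambda entry point: classify feedback, then pick an action for it."""
    if client is None:  # only create a real client when running inside AWS
        import boto3
        client = boto3.client("bedrock-runtime")
    label = classify_feedback(client, event["feedback"])
    action = ACTIONS.get(label, "route_to_human")  # unknown labels go to a person
    return {"statusCode": 200, "body": json.dumps({"label": label, "action": action})}
```

Falling back to `route_to_human` for anything unexpected keeps a misbehaving model from triggering the wrong automated action.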

This is the kind of platform that lets fast-moving industries:

- Auto-classify millions of files with visual grounding

- Tag petabytes in S3 buckets at scale

- Cut image generation costs by 60% for marketing teams

No expensive consultants.

No bloated dev teams.

Just clarity, efficiency, and scaling without stress.

Bedrock AI Platform Analysis

Imagine trying to test three different AI models for your app, but each one requires its own code tweaks, APIs, or even infrastructure. Sounds like a slog, right? That’s the kind of friction Bedrock AI is out to eliminate. Developers can now switch between foundation models such as Anthropic’s Claude, Meta’s Llama, or Amazon’s own Titan through a single unified API. No deep rewrites. No switching languages or spinning up new backend services. Just plug, test, and go.
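Here’s roughly what “one API, many models” looks like with boto3’s bedrock-runtime Converse API: the request shape stays the same and only `modelId` changes. The model IDs are examples (availability depends on region and on which models your account has enabled), and the helper names are ours. The client is passed in so the functions can be exercised with a stub.

```python
from collections.abc import Iterable

# Example model IDs; check the Bedrock console for what's enabled in your account.
CANDIDATE_MODELS = [
    "anthropic.claude-3-haiku-20240307-v1:0",  # Anthropic Claude
    "meta.llama3-8b-instruct-v1:0",            # Meta Llama
    "amazon.titan-text-express-v1",            # Amazon Titan
]


def ask(client, model_id: str, question: str, max_tokens: int = 256) -> str:
    """Send the same chat-style request to any Converse-compatible Bedrock model."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": question}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    return response["output"]["message"]["content"][0]["text"]


def compare_models(client, model_ids: Iterable[str], question: str) -> dict[str, str]:
    """Run one prompt across several models with no per-model code paths."""
    return {model_id: ask(client, model_id, question) for model_id in model_ids}


# Example (requires boto3, AWS credentials, and model access):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
#   compare_models(runtime, CANDIDATE_MODELS, "Define credit risk in one sentence.")
```

Swapping a model is a one-string change, which is where the faster iteration cycles come from.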

This single-interface approach is a game-changer for prototyping. Developers report a 70% drop in iteration timelines because they’re no longer stuck rebuilding pipelines every time they test a new model. A fintech firm, for instance, used Claude 2 for risk analysis and Titan Text for financial reporting—all under one roof. That kind of flexibility used to be reserved for companies with whole AI teams. Not anymore.

When it comes to model customization, Bedrock AI isn’t throwing one-size-fits-all solutions at enterprises. Businesses can tailor foundation models to match their internal logic, workflows, and data structures without letting their sensitive data slip into the training black box.

PwC is already customizing models like Titan Embeddings to flag compliance risks in internal documentation. It works securely thanks to Bedrock’s enterprise-grade encryption and access controls: encryption keys are managed through AWS Key Management Service (KMS), and strict IAM policies keep proprietary data under lock and key.
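To make the “strict IAM policies” point concrete, here’s a sketch that generates a least-privilege identity policy allowing invocation of only specific foundation models. `bedrock:InvokeModel` and `bedrock:InvokeModelWithResponseStream` are real IAM actions and the ARN follows Bedrock’s foundation-model format, but the region and model list are placeholders, and KMS key policies are a separate layer not shown here.

```python
import json


def bedrock_invoke_policy(region: str, model_ids: list[str]) -> dict:
    """Build an IAM policy that only allows invoking the listed foundation models."""
    # Foundation-model ARNs have an empty account field (note the "::").
    resources = [
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}" for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    policy = bedrock_invoke_policy("us-east-1", ["amazon.titan-embed-text-v2:0"])
    print(json.dumps(policy, indent=2))
```

Because IAM denies anything not explicitly allowed, a role holding only this policy can’t call other models or other Bedrock operations.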

Security isn’t just a compliance checkbox; it’s critical infrastructure. In healthcare and finance, a single leak of training data can violate federal law. With Bedrock, firms can confidently train and scale knowing their AI models won’t spill their secrets.

The real power move? Bedrock AI isn’t just one cool platform—it’s deeply wired into the whole AWS ecosystem. That tight integration opens up ways to automate, scale, and optimize almost any workflow.

Here’s where it gets wild: SageMaker can fine-tune image models like Stability AI’s Stable Diffusion XL, slashing up to 60% off visual content generation costs. Meanwhile, Bedrock-driven tagging of the petabytes of unstructured data sitting in S3 and Glacier shrinks search times from hours to seconds. And AWS Lambda can trigger Bedrock-based agents that push customer feedback into CRMs in real time, with zero manual overhead.

This infrastructure-level hookup means a retail chatbot, for instance, can analyze feedback, restock items, and update product descriptions—all autonomously and at hyperscale. Bedrock isn’t just the model playground; it’s the operating center for AI-native businesses.

Bedrock AI Insights for Developers and Startups

Let’s be real: most startups don’t have hundreds of thousands of dollars to blow on infrastructure. Bedrock AI is changing that script. Now, a small five-person team can prototype something meaningful in days, not months. Why? Because Bedrock’s developer tools are actually built for builders, not just big enterprise suits.

Founders using Bedrock can tap into prebuilt pipelines, model fine-tuning options, and scalable APIs without wrangling containers, GPU clusters, or remote storage nightmares. A shared CLI and AWS-powered sandboxes let them test Claude, Titan, and Nova models as easily as switching browser tabs.

For startups strapped for time and cash, that flexibility isn’t just nice—it’s existential. Prototype faster, validate quicker, and pivot without facing a dev stack meltdown.

Some startups aren’t just surviving—they’re thriving, powered by Bedrock’s Free Tier. One standout? A new Y Combinator-backed company that built MVP chatbots for legal clinics using the 10,000 free monthly inferences available via Bedrock’s distilled models. They did it without needing outside funding or an ML PhD on staff.

Another example: A marketing AI startup used SageMaker and Titan models to auto-generate personalized campaign copy for DTC brands. They reached $40K in monthly revenue before ever paying for a Bedrock upgrade.

What’s changing is the game itself. Entry barriers for innovation used to be tech-heavy and capital-intensive. Bedrock flips that equation—giving early-stage founders their own playground of enterprise-grade tools with consumer-level pricing.

The investor crowd is watching—and they’re following the signals. VC firms are increasingly backing AI startups that adopt Bedrock as their core infrastructure layer. The bet? Founders who build on Bedrock are runway-efficient, can scale globally faster, and offload infrastructure complexity to AWS.

- Reports from PitchBook show a 32% increase in seed-stage deals for AI-native companies using Bedrock in their core stack.

- Investor memos point to Bedrock’s availability across AWS regions as key leverage for meeting international demand.

- Some VC funds are already tying lower interest rates on convertible notes to the use of Bedrock’s infrastructure-on-demand pricing.

Looking forward, Bedrock’s continued growth isn’t just a product story; it’s shaping the rules for startup viability. With AWS sinking billions into next-gen Graviton and Inferentia chips to power its AI infrastructure, startups aren’t just getting faster. They’re going greener too.

This isn’t just about better tooling. It’s an infrastructure tipping point. Startups now have a seat at the AI table—and Bedrock’s setting their place.

Pushing the Boundaries of AI Innovation with Bedrock AI

What if your AI model could do more than write memos or answer chat prompts? What if it could help discover a new antibiotic, or test materials faster than any lab in the world ever could? That’s not sci-fi—that’s exactly where Bedrock AI is heading.

Right now, biotech firms are leaning into Amazon Bedrock’s heavy-hitting capability for high-performance simulations. We’re talking high-throughput experiments, molecular analysis, and protein structures analyzed in hours—not months. A real example? One biotech company fused Bedrock’s Claude 2 with Nova to predict protein interactions. Normally a five-year cycle—now shortened to 18 months. No loss in accuracy either.

This isn’t just about speed; it’s about cracking problems that used to sit on whiteboards collecting dust. Advanced simulations like these rely on what’s called multi-agent orchestration: different AI agents handle niche tasks simultaneously, like a research team firing on all cylinders without burnout or breaks.

Materials science isn’t far behind. Labs that once relied on physical prototypes now run thousands of simulations at a fraction of the cost. From flexible solar panels to biodegradable containers, accelerated R&D is turning ideas into market-ready products faster than traditional trial-and-error ever could.
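“Agents handling niche tasks simultaneously” is classic fan-out/fan-in. Here’s a minimal local sketch with Python’s `concurrent.futures`: each hypothetical agent is a plain function standing in for a model-backed simulation step (the scoring heuristics are meaningless placeholders), and the orchestrator merges their results. Nothing here is a Bedrock API.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialist agents; each would wrap a model call in practice.
def binding_agent(candidate: str) -> tuple[str, float]:
    return "binding_score", (len(candidate) % 7) / 7  # placeholder heuristic


def toxicity_agent(candidate: str) -> tuple[str, float]:
    return "toxicity_risk", candidate.count("N") / max(len(candidate), 1)  # placeholder


def stability_agent(candidate: str) -> tuple[str, float]:
    return "stability", 1.0 - candidate.count("O") / max(len(candidate), 1)  # placeholder


AGENTS = [binding_agent, toxicity_agent, stability_agent]


def evaluate(candidate: str) -> dict[str, float]:
    """Fan one candidate out to every agent in parallel, then fan the results back in."""
    with ThreadPoolExecutor(max_workers=len(AGENTS)) as pool:
        futures = [pool.submit(agent, candidate) for agent in AGENTS]
        # Collect (metric_name, value) pairs into a single report.
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    print(evaluate("CCNOCCO"))
```

Threads suit this shape when each agent mostly waits on a remote model call; CPU-heavy local simulations would fit a process pool better.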

Here’s the core play: Bedrock isn’t giving you just one supermodel to run every use case. It curates over 50 foundation models, including Nova and Meta’s Llama variants. Pick the tool that’s specialized for your result. Physics-heavy simulations can use distilled versions of large models, meaning smaller size, less wait time, and lower cost.
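“Pick the tool that’s specialized for your result” usually ends up as a routing table in code. Here’s a sketch that maps task types to Bedrock model IDs and falls back to a cheaper default. The IDs are examples that vary by region and change over time; the task categories, reasons, and fallback rule are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model_id: str
    reason: str


# Hypothetical routing table; model IDs are examples and may differ in your region.
ROUTES = {
    "long_reasoning": Route("anthropic.claude-3-5-sonnet-20240620-v1:0", "stronger multi-step reasoning"),
    "bulk_summaries": Route("amazon.nova-lite-v1:0", "low cost per token"),
    "classification": Route("amazon.nova-micro-v1:0", "text-only, lowest-latency tier"),
    "open_weights": Route("meta.llama3-8b-instruct-v1:0", "open-weight model family"),
}
DEFAULT = ROUTES["bulk_summaries"]


def pick_model(task_type: str) -> Route:
    """Return the configured model for a task, falling back to a cheap default."""
    return ROUTES.get(task_type, DEFAULT)


if __name__ == "__main__":
    for task in ("classification", "long_reasoning", "something_new"):
        route = pick_model(task)
        print(f"{task:>15} -> {route.model_id} ({route.reason})")
```

Keeping the mapping in data rather than in branching code makes it easy to drop in a distilled model for a task later without touching callers.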

You’re not guessing if it’ll work—you’re refining what already works at scale.

Future-Ready AI Agentic Systems from Bedrock

Here’s a curveball most companies aren’t ready for: future AI systems that don’t wait for your prompts—they just act.

Amazon’s Bedrock AI is at the forefront of building “agentic AI.” Basically, we’re talking about autonomous systems that make decisions on the fly. No hand-holding. These are multi-agent teams that can sense, reason, and execute in real time.

You’ve got early pilots already in action. Supply chain managers are using Bedrock-powered agents to reroute deliveries when a hurricane hits or adjust inventories before ports even report a delay. No human flagged the disruption—Bedrock did.
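Under the hood, an agent like that runs a sense, reason, act loop. Here’s a deliberately simplified sketch: “sense” collapses disruption signals into a risk score per route, “reason” is a plain rule (where a production agent would call a model), and “act” reassigns shipments to a safer route. The routes, signal format, and risk threshold are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Shipment:
    shipment_id: str
    route: str


# Hypothetical alternate routes per primary route.
ALTERNATES = {"gulf_port": ["atlantic_port", "rail_inland"], "atlantic_port": ["gulf_port"]}


def sense(signals: list[dict]) -> dict[str, float]:
    """Collapse raw disruption signals into the worst risk score per route."""
    risk: dict[str, float] = {}
    for s in signals:
        risk[s["route"]] = max(risk.get(s["route"], 0.0), s["risk"])
    return risk


def reason(route: str, risk: dict[str, float], threshold: float) -> str:
    """Keep the route unless it's too risky; otherwise pick the first safe alternate."""
    if risk.get(route, 0.0) < threshold:
        return route
    for alt in ALTERNATES.get(route, []):
        if risk.get(alt, 0.0) < threshold:
            return alt
    return route  # no safe alternate: keep the current route (a real agent would escalate)


def act(shipments: list[Shipment], signals: list[dict], threshold: float = 0.7) -> list[str]:
    """Reroute shipments in place and return a log of the changes made."""
    risk = sense(signals)
    log = []
    for s in shipments:
        new_route = reason(s.route, risk, threshold)
        if new_route != s.route:
            log.append(f"{s.shipment_id}: {s.route} -> {new_route}")
            s.route = new_route
    return log


if __name__ == "__main__":
    fleet = [Shipment("S1", "gulf_port"), Shipment("S2", "atlantic_port")]
    hurricane = [{"route": "gulf_port", "risk": 0.9}, {"route": "atlantic_port", "risk": 0.2}]
    print(act(fleet, hurricane))
```

Returning a change log matters even for autonomous agents: it’s what lets a human audit decisions after the fact.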

These agents aren’t brittle. They’re functionally independent systems designed for chaos, built with real-world constraints. AWS ran a logistics trial showing agents reduced shipping delays by 55% without human involvement—because they made decisions faster than any boardroom.

When disaster strikes—flood, fire, outages—imagine having sensors + Bedrock agents acting without you ever lifting a finger. Trigger insurance evaluations, dispatch emergency aid, route materials around destroyed roads. These aren’t “roadmap ideas.” They’re already tested.

What makes this possible? Retrieval-Augmented Generation (RAG) lets these agents pull from up-to-the-second knowledge bases, cross-checking facts mid-task from legal, financial, or scientific sources. It’s not just automation—it’s smart, contextual automation.

Finance and the Power of Bedrock for Risk Analysis

Ask any analyst—it’s not the analysis that drains them, it’s the mindless data dig. Thousands of credit reports, financial disclosures, internal risk assessments. It’s death by spreadsheet.

That’s why firms like Moody’s pushed Bedrock into their core. Instead of stacking humans, they stack AI agents: some extract data from earnings reports, others validate it against real-time filings, and another writes the summary. End result? Manual review hours dropped by 82%.
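The extract, validate, summarize split can be sketched as three small functions. Here “extraction” is a regex over report text and validation cross-checks each figure against a reference filing within a relative tolerance; in the workflow described above each step would be a model-backed agent. Field names, the tolerance, and the sample data are hypothetical.

```python
import re


def extract(report: str) -> dict[str, float]:
    """Pull 'Field: $123.4M'-style figures out of report text."""
    pattern = r"(\w[\w ]*?):\s*\$?([\d.]+)M"
    return {name.strip().lower(): float(value) for name, value in re.findall(pattern, report)}


def validate(extracted: dict[str, float], filing: dict[str, float], tolerance: float = 0.01):
    """Split fields into confirmed and flagged, using relative difference vs. the filing."""
    confirmed, flagged = {}, {}
    for field, value in extracted.items():
        reference = filing.get(field)
        if reference is not None and abs(value - reference) <= tolerance * abs(reference):
            confirmed[field] = value
        else:
            flagged[field] = value  # missing from the filing or outside tolerance
    return confirmed, flagged


def summarize(confirmed: dict[str, float], flagged: dict[str, float]) -> str:
    """Write a one-line summary that calls out anything needing human review."""
    parts = [f"{k} ${v}M" for k, v in confirmed.items()]
    note = f" Needs review: {', '.join(flagged)}." if flagged else ""
    return "Confirmed: " + ", ".join(parts) + "." + note


if __name__ == "__main__":
    report = "Revenue: $120.0M. Net debt: $45.5M. Capex: $30.0M."
    filing = {"revenue": 120.0, "net debt": 47.0, "capex": 30.1}
    print(summarize(*validate(extract(report), filing)))
```

Here net debt misses the 1% tolerance and gets flagged, so a human only looks at the one figure that disagrees with the filing.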

Teams aren’t guessing anymore—they’re orchestrating across Bedrock’s API, choosing which model serves what case. That’s compliance you can prove, faster than regulators knock.

And here’s the kicker: by integrating RAG, these AI systems keep their outputs aligned with regulatory changes. As soon as an updated IFRS clause lands in the knowledge base, Bedrock agents reflect it in their validation workflows. That’s real-time compliance.

Healthcare Breakthroughs with Bedrock AI

Big Pharma’s got a problem: billion-dollar pipelines with 90% failure rates. Drug discovery isn’t slow because of science—it’s slow because of simulations, models, and re-tests. Bedrock changes that.

AI models available through Bedrock, like Claude and Nova, have been applied to biochemical modeling. One biotech firm used distilled models (think: same brainpower, less computational cost) and boosted simulation speeds by 300%. In plainer terms, each simulation run now finishes in roughly a quarter of the time it used to take.

- Reduced R&D time means fewer clinical trial delays.

- Cheaper simulations mean small labs can compete with pharma giants.

- Custom models preserve data privacy while still learning on proprietary patient trials.

Now take that approach and plug it into epidemic prediction, vaccine modeling, or even personalized drug responses. Bedrock’s edge isn’t just intelligence—it’s scalability, compliance, and cost control rolled into one.

Using Bedrock AI to Reshape Retail and E-Commerce

Online shops already use AI… but most of it is about as smart as a Magic 8-ball. What Bedrock brings? Real personalization based on actual behavior—minute-by-minute.

One major e-comm player plugged Bedrock’s Titan Text and Titan Image models into their site. The AI rewrote product descriptions dynamically, based on customer demographics, past clicks, even weather patterns. The A/B test? AI-written content boosted conversions by 35%.
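A “35% boost in conversions” is a relative lift, and it only means something if the test had enough traffic. Here’s a quick sketch that computes the lift plus a standard pooled two-proportion z-test using only the standard library. The visitor and conversion counts are invented to produce a 35% lift; they are not the retailer’s data.

```python
import math


def ab_lift(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Relative lift of variant B over A, with a pooled two-proportion z-test."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    lift = (rate_b - rate_a) / rate_a
    # Pooled conversion rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return {"lift_percent": round(lift * 100, 1), "z": round(z, 2), "p_value": p_two_sided}


if __name__ == "__main__":
    # Hypothetical: 10,000 visitors per arm; 2.0% vs 2.7% conversion.
    print(ab_lift(200, 10_000, 270, 10_000))
```

With these made-up counts the lift is 35.0% and z comes out around 3.27 (p roughly 0.001), so a gap that size on 10,000 visitors per arm is unlikely to be noise. The same 35% lift on a few hundred visitors would not be convincing.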

That’s real ROI. Not fluff.

The same retailer saw a jump in customer satisfaction when tailored feedback loops updated in real time using Lambda + Bedrock. A customer leaves a bad review? AI agents spot patterns, recommend stock changes, and even rewrite support responses, auto-magically.

From marketing content to backend logistics, Bedrock makes the e-comm stack tighter, faster, and way more responsive. Developers also win since all models run under one API. Switching out image synths or text models takes minutes, not weeks.

Where Bedrock AI is Headed in the AI Ecosystem

Everyone’s fighting for AI dominance—Amazon, Microsoft, Google. But Bedrock’s carving its edge.

It’s not trying to be “the biggest.” It’s positioned to be the most usable. Over 50 billion inferences a month happen inside this system, powered by models optimized for both power and price.

Where does it win? Energy efficiency with Inferentia and Graviton4 chips. Global reach with 32 AWS regions. Free tiers for indie builders. And a serverless setup so startups don’t break budgets scaling.

In a world where most AI platforms sell power, Bedrock sells practicality. And that just might make it the backbone of real-world AI for years.